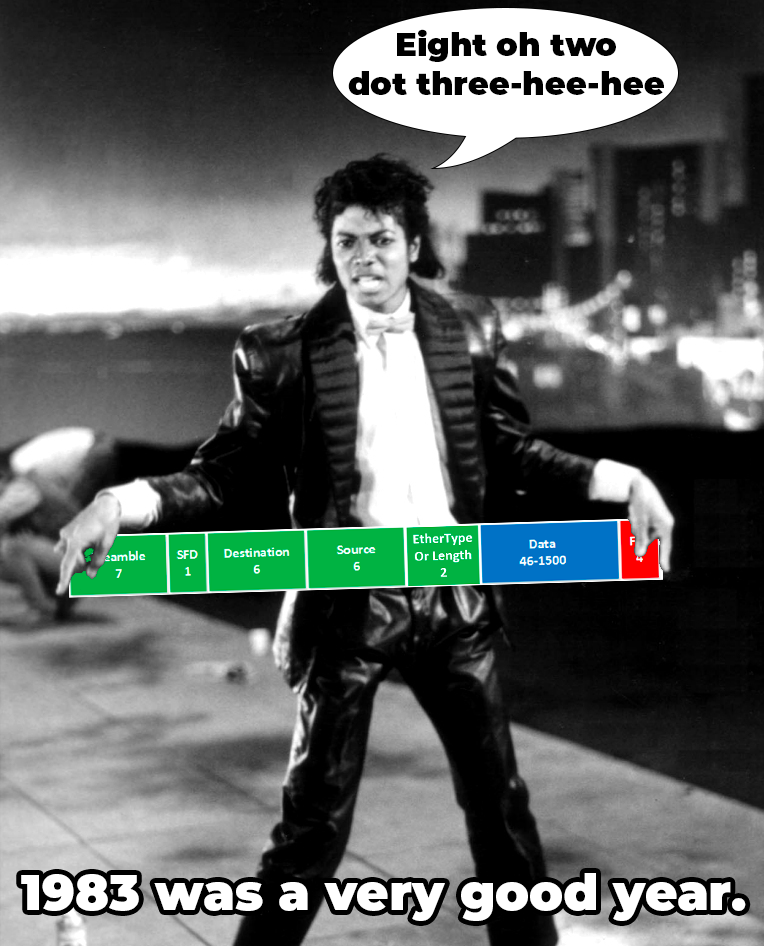

Truer words have never been spoken. Ethernet has proven to be one of the most successful and reliable technologies in history. Let’s have a quick history lesson for the youngsters.

Ethernet was first standardized by the IEEE in 1983 (as 802.3) for speeds up to 10 megabits per second (Mbps). It supports all sorts of different physical layers including STP, coax (who remembers vampire taps!), fiber optics, and more. It has gradually supported additional bandwidth standards, from 100Mbps up to today’s mind-blowing 800Gbps. Ethernet has served us well in connecting all sorts of devices in various network configurations for over 40 years.

But there have been a number of attempts on its life.

And in this corner…

One of the first big efforts to dethrone ethernet was ATM. Rather than offering larger packet sizes to accommodate the changing communications requirements of the late 90s, ATM chopped everything into fixed 53-byte “cells”. There was a direct correlation between cells lost in the backplane and cells lost in the engineer’s brain when troubleshooting. ATM could support 155Mbps connections, which at the time was a HUGE amount of bandwidth, but it was soon eclipsed by commodity 100Mbps and 1Gbps ethernet. There are a number of technical and market reasons why ATM failed, but let’s just say that some vendors and customers lost big by betting on this network protocol. It was a huge black eye for the strategy people in the CTO’s office.
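To put a number on why those tiny cells hurt, here is a rough back-of-the-envelope sketch of the ATM “cell tax”. The 53-byte cell size comes from the text above; the 5-byte cell header and the 8-byte AAL5 trailer are standard ATM figures. The function names are made up for illustration, and the sketch assumes plain AAL5 encapsulation with no extra LLC/SNAP header, so treat the results as approximate.

```python
import math

CELL_BYTES = 53          # fixed ATM cell size
HEADER_BYTES = 5         # standard ATM cell header
PAYLOAD_BYTES = CELL_BYTES - HEADER_BYTES  # 48 bytes of payload per cell
AAL5_TRAILER_BYTES = 8   # assumption: plain AAL5 framing, no LLC/SNAP


def cells_for_packet(packet_bytes: int) -> int:
    """Cells needed to carry one packet; the last cell is padded out to 48 bytes."""
    return math.ceil((packet_bytes + AAL5_TRAILER_BYTES) / PAYLOAD_BYTES)


def wire_efficiency(packet_bytes: int) -> float:
    """Fraction of the bytes on the wire that are actual packet data."""
    return packet_bytes / (cells_for_packet(packet_bytes) * CELL_BYTES)


if __name__ == "__main__":
    for size in (64, 1500):
        print(size, cells_for_packet(size), f"{wire_efficiency(size):.1%}")
    # 64 2 60.4%
    # 1500 32 88.4%
```

Under these assumptions a full-size 1500-byte packet gives up roughly 12% of the link to headers and padding, and small packets fare much worse.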

Lesson One: Ethernet speeds are always increasing.

Very expensive toys

In 2005 Infiniband was a very promising technology that had just hit the big time for enterprise use; before that it was almost a requirement for networking in HPC and supercomputing clusters. At first IB was extremely expensive: not just the switches, but the NIC/HBAs too were orders of magnitude more expensive. But IB was the first data center server connectivity that could do 40Gbps, so a lot of people incorrectly assumed that it was the future. IB could also do some interesting things like lossless fabric, and hyperconvergence for things like storage, but it never really took off outside of purpose-built environments in the largest enterprises.

Lesson Two: Ethernet is suitable for 99% of networks.

Slow and steady wins the race

In the late 2000s, Cisco was unveiling a ton of cool new proprietary protocols like OTV and FabricPath to help address some of the limitations of ethernet networks. While those technologies did have some limited success, Cisco really took their eye off the ball (ethernet economics) and allowed a new startup named Arista to enter the market. Arista has lately been putting a major dent in Cisco’s data center business, and that success has caused many new companies to enter the switching market. Using inexpensive ASICs that supported ethernet enhancements such as VXLAN, hardware ACLs, and enhancements to QoS, these nimble vendors could provide the same capabilities as the Cisco proprietary protocols, without the notoriously expensive licenses.

Lesson Three: Ethernet is continuously enhanced to support the latest types of workloads.

But AI!

AI requires a large number of GPUs, and to utilize them effectively you need to connect them at very high speeds with very low and predictable latency. This typically entails building a purpose-built fabric for the GPU servers to ensure that other traffic cannot interfere with the AI traffic sessions (see NCCL, RCCL, MPI).

There are currently three major options being discussed as alternatives to ethernet to satisfy AI requirements:

Infiniband – This is indeed a proven technology for lossless fabrics, with a rich history of RDMA support, low-latency message passing, and a variety of other features that are important for hyperconverged environments. Infiniband can support speeds above 400Gbps but 800+ is still way off into the future and will likely cost an arm and a leg. Infiniband solutions come at a variety of price points depending on which vendor you select.

CXL – This is a fairly new technology that aims to provide an extremely high bandwidth path direct to CPU or RAM on connected devices. This enables new features such as dense memory arrays, memory multicasting, and memory expansion for GPUs.

Optical Circuit Switching (Google) – At a certain point, all of these legacy protocols and intermediary chips and ASICs become cumbersome for building extremely large data center fabrics. This approach entails stripping down the physical network hardware to merely provide a programmable set of mirrors in a cabling plant or switch form factor. This results in an ultra-low price point for 400 and 800Gbps, with the ability to go much faster in future releases. The downside is that the connectivity is entirely point-to-point, and positioning the mirrors can take up to 2 seconds, which is a lifetime in traditional networking. In order to make use of this, you need to know precisely what data you need, and when you will need it. It’s not realistic for front end or application servers. But if you need to get multiple terabytes into the memory of thousands of servers or GPUs to deal with “flash” AI traffic demands, this will do it without swamping the rest of the network. It’s a very interesting concept that is likely unsuitable for 99% of IT environments.
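Here is a quick sketch of why a 2 second mirror move is such a big deal, and why it stops mattering for bulk transfers. It uses only the figures quoted above (an 800Gbps link and a worst-case 2 second reconfiguration). The function names are hypothetical, and the model is deliberately naive: it assumes one reconfiguration per transfer and a link that runs at full line rate the rest of the time.

```python
def bytes_forgone(link_gbps: float, reconfig_seconds: float) -> float:
    """Bytes the link could have carried while the mirrors were moving."""
    return link_gbps * 1e9 / 8 * reconfig_seconds


def circuit_utilization(transfer_bytes: float, link_gbps: float,
                        reconfig_seconds: float) -> float:
    """Share of total time spent moving data, assuming one mirror move per transfer."""
    transfer_seconds = transfer_bytes * 8 / (link_gbps * 1e9)
    return transfer_seconds / (transfer_seconds + reconfig_seconds)


if __name__ == "__main__":
    # Capacity lost to a single worst-case mirror move on an 800Gbps link.
    print(f"{bytes_forgone(800, 2.0) / 1e9:.0f} GB")        # 200 GB

    # A 2 TB bulk load amortizes the setup cost nicely...
    print(f"{circuit_utilization(2e12, 800, 2.0):.1%}")     # 90.9%

    # ...while a 1 MB request/response spends nearly all its time waiting.
    print(f"{circuit_utilization(1e6, 800, 2.0):.4%}")      # 0.0005%
```

Under these assumptions, the circuit only pays off when each setup is followed by many seconds of sustained transfer, which is why it suits bulk GPU loading and not front end traffic.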

What is best?

Depending on who you ask you’re going to get different answers. Ask your GPU vendor and they’ll probably want to pitch you on something proprietary that they know works well, and costs a lot. Ask a popular networking company and they’ll push you to consider a platform that uses their latest proprietary chips. Ask an AI software engineer and they’ll probably start drooling about some new feature that is only available on some brand new box. Guess what, they’re all right in their own ways.

A quick summary

- Ethernet speeds are always increasing. The roadmap goes well into the future and 800Gbps is a tremendous amount of bandwidth that should satisfy apps for the next few years.

- Ethernet is suitable for 99% of networks. If you don’t know for certain that you need a specific new technology, stick to something you know well.

- Ethernet has been continually enhanced to support the latest types of workloads. New features like RoCE and DCQCN offer services that make ethernet even more performant for the most demanding apps. Also, check out Ultra Ethernet for a glimpse of the future.

No one knows what the future holds for networking. These new technologies are very cool and will definitely provide some performance improvements. However, this simple review of some of ethernet’s vanquished foes should make it apparent that you

NEVER BET AGAINST ETHERNET.

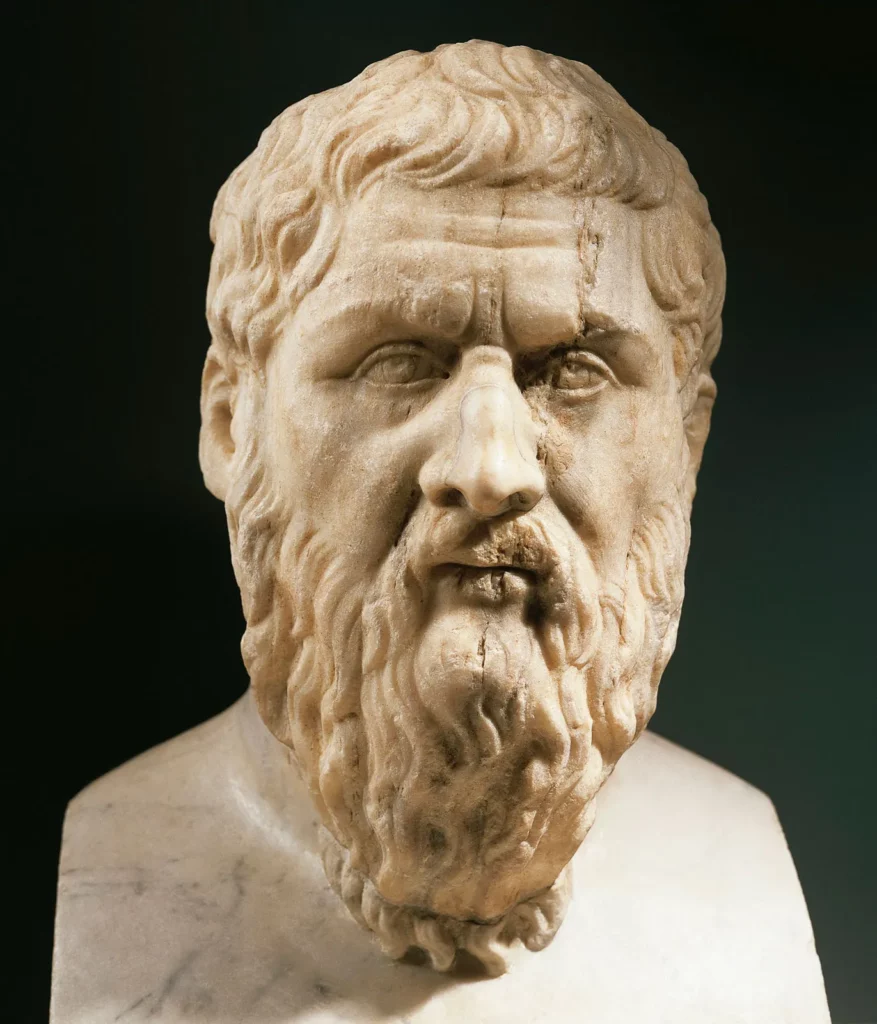

“The default username is cisco. The default password is cisco.”

– Plato
